Hi, this is David (@tianweiliu) from MeetsMore’s Site Reliability Engineering team.

At MeetsMore, we have been using Elastic Container Service (ECS) provided by Amazon Web Services to host all of our services. Recently, we have also started using Elastic Kubernetes Service (EKS, AWS-managed Kubernetes), and we are planning to migrate all our services to it. The benefits we are after include publicly available helm charts (collections of yaml templates that can be rendered into Kubernetes manifests to run applications) for our growing need to host third-party software, automated bin-packing and on-demand compute provided by Karpenter (a just-in-time node provisioner), powerful auto-scaling via KEDA (an event-driven autoscaler), and more.

- Declarative GitOps

- Declaring Argo CD itself

- Declaring third-party applications

- Declaring applications we built

- The downside

Declarative GitOps

Before we go full steam ahead on migrating all of our applications to EKS, we need a reliable and easy-to-follow process to maintain everything now and in the future.

Modern DevOps practice led us to declarative Infrastructure-as-Code (CDKTF) to manage our infrastructure, and we have already experienced many benefits from it: our codebase is the single source of truth for all of our resource configurations, which are clearly defined and version controlled.

Kubernetes and Helm are clearly built on the same principle. However, we have all experienced the messiness of juggling manifest files without knowing what was modified, and while helm is a great templating engine, it falls short on maintaining a robust lifecycle for deploying and rolling back.

Application definitions, configurations, and environments should be declarative and version controlled. Application deployment and lifecycle management should be automated, auditable, and easy to understand.

Argo CD looks like the next-generation declarative application management tool that we need. It has already solved all the issues mentioned above, and it can potentially turn our EKS cluster into a fully automated, self-serve compute platform.

Declaring Argo CD itself

The problem

The first issue we faced was a classic chicken-and-egg problem. We really want Argo CD to be publicly available, encrypted with HTTPS using a trusted SSL certificate, and gated by Single Sign-On with authorization based on the groups we already have in our identity provider, with its secrets coming from somewhere safe. We would definitely use tools like external-dns, cert-manager and external-secrets for that. But if those were installed first and managed without Argo CD, we would lose the benefits of having Argo CD in the first place.

The solution

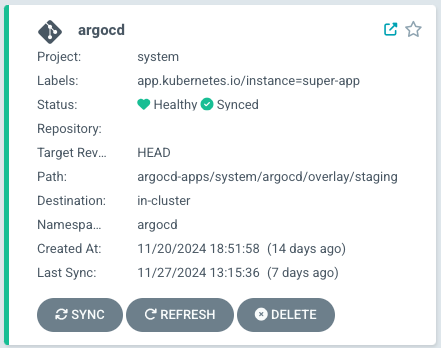

The solution is to manage Argo CD with Argo CD itself:

- We install the most basic version of Argo CD following the official getting started guide first

- We port-forward and log in to the UI using the admin username and password

- Now we have a starting ground to install all the tools we talked about. (We started off installing them one by one manually, but now that we have a collection of Argo CD Application manifests for them, we can install all of them in one go.)

- Finally, we have the Argo CD Application for Argo CD itself, which points to all the kustomizations we made on top of the official manifests, all stored in our GitHub repository.

- Once we load the Argo CD Application manifest into the Argo CD UI, Argo CD is smart enough to know what was already installed in the first step and what needs to be added or changed.

- After syncing Argo CD itself, our Argo CD is now exposed via ingress-nginx, with DNS automatically synced into Route53 by external-dns. The traffic is fully HTTPS, using an SSL certificate issued by Let’s Encrypt and managed by cert-manager. Argo CD secrets (OAuth, Slack, etc.) are also synced directly from our AWS Secrets Manager thanks to external-secrets.
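To make the last steps more concrete, here is a minimal sketch of what such a self-managing setup could look like. It is not our exact configuration: the folder `path/to/argocd`, the file names `ingress.yaml`, `external-secret.yaml` and `argocd-cm-patch.yaml`, and the use of the `default` project are all placeholders for illustration. The only real URL is the official install manifest from the Argo CD getting started guide.

```yaml
# kustomization.yaml, stored in a hypothetical folder path/to/argocd
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: argocd
resources:
  # Official install manifest; in practice, pin this to a specific release tag.
  - https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml
  - ingress.yaml           # hypothetical: Ingress served by ingress-nginx
  - external-secret.yaml   # hypothetical: ExternalSecret for OAuth/Slack secrets
patches:
  - path: argocd-cm-patch.yaml   # hypothetical: SSO settings layered on argocd-cm
---
# The Application that makes Argo CD manage itself
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: argocd
  namespace: argocd
spec:
  project: default
  source:
    repoURL: "https://github.com/meetsmore/awesome-repo.git"
    targetRevision: HEAD
    path: path/to/argocd   # hypothetical folder containing the kustomization above
  destination:
    server: "https://kubernetes.default.svc"
    namespace: argocd
```

Because the kustomization builds on the same official manifests that were installed by hand, Argo CD can match the existing resources and only show the additions and changes as out-of-sync.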

Self-managed Argo CD Application

Declaring third-party applications

The problem

Although it feels very intuitive to deploy a helm chart directly from Argo CD’s UI by creating an Application and filling in the chart information, it is actually an anti-pattern.

Why? Because it is very likely that we need some customizations on the helm values (or kustomize). If we do everything from the UI, we are not recording those custom values anywhere (apart from the UI or the Application manifest stored in the cluster).

That is not really declarative, and we could lose everything if we accidentally delete the Application. Not to mention that we also have a production cluster, where we would have to do everything again.

The solution

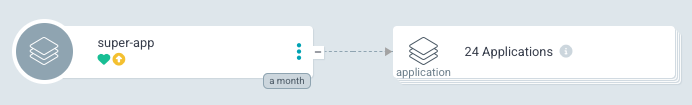

We need an Application of an Application, or even an Application of Applications. To make it easier to understand, let’s call them the super Application and the child Application.

Since we want to record all the customizations we made, we store the declaration of the final Argo CD Application (the child Application) in code as a yaml file. (We can also use kustomize on top of it to share most of the code, but allow me to skip that for now to avoid complicating things further.)

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: external-secrets
spec:
  project: system
  source:
    repoURL: "https://charts.external-secrets.io"
    targetRevision: 0.10.0
    chart: external-secrets
    helm:
      values: |-
        podAnnotations:
          ad.datadoghq.com/external-secrets.logs: '[{"service": "k8s-external-secrets", "source": "external-secrets"}]'
        certController:
          podAnnotations:
            ad.datadoghq.com/cert-controller.logs: '[{"service": "k8s-external-secrets", "source": "external-secrets"}]'
        webhook:
          podAnnotations:
            ad.datadoghq.com/webhook.logs: '[{"service": "k8s-external-secrets", "source": "external-secrets"}]'
  destination:
    server: "https://kubernetes.default.svc"
    namespace: external-secrets
  syncPolicy:
    syncOptions:
      - CreateNamespace=true
```

The child Application is the Application that, when synced, deploys the actual external-secrets helm chart with our helm value overrides, and it is now recorded and version controlled in our code.

How do we use it? It all comes down to the cleverness of Argo CD making everything a manifest.

The child Application manifest file can be deployed and synced from the code repo using another Application (the super Application). The super Application only needs to point Argo CD to the code repo and the path of the child Application manifest, and it will likely never need to change again.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: external-secrets-super-app
spec:
  project: default
  source:
    repoURL: "https://github.com/meetsmore/awesome-repo.git"
    targetRevision: HEAD
    path: path/to/child/application/manifest
  destination:
    server: "https://kubernetes.default.svc"
    namespace: argocd
  syncPolicy:
    syncOptions:
      - CreateNamespace=true
```

Notice that the destination namespace in the super Application is argocd instead of external-secrets. The one and only purpose of the super Application is to deploy and sync the single manifest of the child Application, and all Argo CD Application manifests should be stored in the same namespace as Argo CD itself (otherwise, you will see 403 permission denied errors).

Now, when we want to modify the external-secrets custom helm values or upgrade to a newer chart version, we modify the child Application manifest in our repository. The super Application picks up the change and reports itself as out-of-sync, because the child Application manifest in the repo is different from the child Application that is deployed. If auto-sync is enabled on the super Application, the child Application is updated automatically, and if auto-sync is also enabled on the child Application, external-secrets is updated automatically as well. That is full declarative GitOps automation.
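For reference, auto-sync is switched on per Application under `syncPolicy.automated`. The fragment below is a minimal sketch of what could be added to either the super Application or the child Application; whether to enable `prune` and `selfHeal` is a separate decision, and the values shown here are an assumption rather than a recommendation.

```yaml
spec:
  syncPolicy:
    automated:
      prune: true      # assumption: also delete resources that were removed from git
      selfHeal: true   # assumption: revert manual changes made directly in the cluster
    syncOptions:
      - CreateNamespace=true
```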

Of course, external-secrets is not the only application in our cluster that needs customization. We have many other applications that should benefit from the same process. However, we are back to square one if we need one super Application for each child Application. What we really want is a single super Application that syncs all child Applications (an Application of Applications). Achieving this is quite simple: in an Argo CD Application, the path is a folder rather than a file, so all the manifest files within that folder are automatically included. We just need to put all the child Application manifests into a single folder, and the super Application that points to that folder will deploy and sync all of them for us. Simple as that.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: super-app
spec:
  project: default
  source:
    repoURL: "https://github.com/meetsmore/awesome-repo.git"
    targetRevision: HEAD
    path: path/to/all/child/application/manifests
  destination:
    server: "https://kubernetes.default.svc"
    namespace: argocd
  syncPolicy:
    syncOptions:
      - CreateNamespace=true
```
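One caveat if the child Application manifests are grouped into sub-folders: for a plain directory source, Argo CD only reads the manifests directly inside the given path unless recursion is turned on. The fragment below sketches the `directory.recurse` option for the super Application source; the sub-folder layout is hypothetical and not something described above.

```yaml
spec:
  source:
    repoURL: "https://github.com/meetsmore/awesome-repo.git"
    targetRevision: HEAD
    path: path/to/all/child/application/manifests
    directory:
      recurse: true   # also pick up manifests in sub-folders (hypothetical layout)
```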

Declaring applications we built

The problem

We have figured out how to deploy applications that other people built. What is missing to deploy the applications we built? The answer is in the name: Argo CD only manages the Continuous Delivery part of the full GitOps lifecycle. We still need to “connect” it to the Continuous Integration part, which runs on GitHub Actions.

Yet again, the first instinct is to “connect” CI with CD directly, but that is an anti-pattern, because CD should be declarative, version controlled and automated. If Argo CD requires CI to tell it what to do, there is hidden logic in what we call “CI” that is actually CD, and Git and Ops become tightly coupled. For example, suppose you roll back in Argo CD but someone else has just made a new commit: the CI pipeline does not know about the rollback and forces Argo CD to sync to the newer version.

However, manually updating our manifests every time a release is needed is not practical either, even though it is perfectly declarative. We would likely be back to coupled CI/CD after the second release.

The perfectly declarative but complicated solution

Let’s start with the ideal solution first. The ideal solution is to separate application code from application manifests by keeping them in different repositories:

- CI builds the artifacts (usually docker images) and then, instead of controlling CD, commits to the manifest repository like a human would.

- Argo CD becomes out-of-sync when the manifest repository changes, and auto-sync makes the update happen automatically.

How is this different from CI triggering CD directly? Notice that CI does not directly manipulate CD in any way. The only thing CI does is commit the new image tag into the manifest repo, which a human would do to update the application anyway. The lifecycle is still clearly defined, version controlled and fully controlled by Argo CD.
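As an illustration of CI committing "like a human", a GitHub Actions job along the following lines could bump the image tag in the manifest repository. This is a sketch under assumptions, not our actual pipeline: the repository name `meetsmore/manifest-repo`, the secret `MANIFEST_REPO_TOKEN`, the file `path/to/values.yaml`, the `image.tag` key and the bot identity are all hypothetical, and it assumes `yq` (v4) is available on the runner.

```yaml
name: build-and-bump
on:
  push:
    branches: [main]
jobs:
  bump-manifest:
    runs-on: ubuntu-latest
    steps:
      # The image build and push steps would run before this point.
      - name: Check out the manifest repository
        uses: actions/checkout@v4
        with:
          repository: meetsmore/manifest-repo          # hypothetical repository
          token: ${{ secrets.MANIFEST_REPO_TOKEN }}    # hypothetical secret with write access
      - name: Commit the new image tag
        env:
          IMAGE_TAG: ${{ github.sha }}
        run: |
          # Update the tag in the helm values file (hypothetical path and key).
          yq -i '.image.tag = strenv(IMAGE_TAG)' path/to/values.yaml
          git config user.name "ci-bot"
          git config user.email "ci-bot@example.com"
          git commit -am "Deploy image ${IMAGE_TAG}"
          git push
```

Note that the final `git push` can fail when two builds finish close together, so a real pipeline would need some retry or rebase handling.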

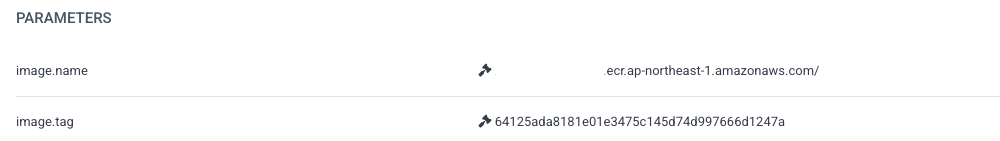

The simpler solution with argocd-image-updater

We now have CI that only builds new artifacts, and in most cases only new images. But having CI commit to a different repo, with potential conflicts, is hard. Fortunately, the amazing open source community has already built a solution for this: argocd-image-updater. We set up IAM Roles for Service Accounts (IRSA) to allow argocd-image-updater to watch our ECR, and added a couple of annotations on the Argo CD Application manifest to tell argocd-image-updater how to watch for image tag changes. With that in place, argocd-image-updater automatically overrides the image tags in helm values or kustomize whenever CI builds and uploads a new image to ECR, and it can even write the change back to git.
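The annotations could look roughly like the sketch below. The annotation keys are the ones documented for annotation-based releases of argocd-image-updater, so check them against the version you run. The alias `app`, the ECR repository URL and the helm value names `image.repository` and `image.tag` are placeholders, not our real settings.

```yaml
metadata:
  annotations:
    # Images to watch, in the form <alias>=<image>; the account, region and repository are placeholders.
    argocd-image-updater.argoproj.io/image-list: app=123456789012.dkr.ecr.ap-northeast-1.amazonaws.com/my-app
    # Follow the most recently built image (older releases call this strategy "latest").
    argocd-image-updater.argoproj.io/app.update-strategy: newest-build
    # Helm values that hold the image name and tag (hypothetical value names).
    argocd-image-updater.argoproj.io/app.helm.image-name: image.repository
    argocd-image-updater.argoproj.io/app.helm.image-tag: image.tag
```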

The compromise we make to make it even simpler

You can probably already tell by now: the most difficult part of the entire lifecycle is the need to commit to the manifest repo whenever CI produces a new artifact.

Can we skip that part?

Not if we want it to be strictly declarative.

That’s where we make the compromise. By default, argocd-image-updater updates in argocd mode instead of git mode, which means that the parameter override is only applied in Argo CD on top of the Application manifest that is live, instead of writing the changes back to the manifest repo.

We see it as a reasonable compromise: it is okay for us to refer to Argo CD to check which image tag is deployed instead of looking at our manifest repo, and we never needed to manually update the manifest to change the image tag anyway. argocd-image-updater uses a parameter override, which takes precedence over the actual manifest in code and is tracked by Argo CD to allow rollbacks.
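The choice between the two modes is a single annotation on the Application. The fragment below is a small sketch: `argocd` is the default and does not need to be spelled out, and it is shown here only to make the compromise visible.

```yaml
metadata:
  annotations:
    # Default: keep the image override inside Argo CD only (the compromise described above).
    argocd-image-updater.argoproj.io/write-back-method: argocd
    # Alternative: commit the override back to the manifest repository.
    # argocd-image-updater.argoproj.io/write-back-method: git
```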

The downside

Of course, the setup above is not perfect. We do face some issues that we are actively trying to solve:

- Decoupling CI/CD means losing the single source of reference on status.

  - We are used to checking the CI pipeline to determine whether a build was successfully deployed.

  - But since we separated CD from the CI pipeline, the CI pipeline can only show the status of artifact building and the checks before it.

  - Developers now need to check Argo CD, or wait for the Argo CD message in Slack, to know whether a build was successfully deployed.

- Separating manifests into a different repository means losing the correlation between the deployment (application manifest) hash and the build (application code) hash.

  - We are used to identifying deployments by the commit hash of a certain commit in the application codebase. It is very intuitive for rolling back: “I made a mistake in this commit, I should roll back to before this commit hash.”

  - However, now that the manifests are separated into a different repository, the deployment hash reflects the manifest commit hash and the build hash reflects the code commit hash.

  - The relationship between the two hashes has to be determined by checking either the Argo CD history (if there is no write-back) or the file commit history (if there is write-back).

- CD could need complex timing control, since we have not migrated everything to EKS yet.

  - For example, we are still using the old pattern of triggering a database migration task before deploying our server backend.

  - We have another migration job that is newer and has already been migrated to EKS.

  - We recently found a race condition: the database migration job might affect the other migration, so the other migration should run after the database migration.

  - However, we have no easy way of controlling the other migration, as it is automatically triggered by Argo CD via argocd-image-updater on image updates.

  - Current solution:

    - Monitor the GitHub Actions workflow from inside the other migration using an initContainer, and only exit the initContainer when the database migration finishes.

  - Future solution:

    - Migrate the database migration to EKS as well, so the database migration can run as the initContainer of the other migration, or just combine them into the same k8s job.
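The future solution relies on the fact that all initContainers of a pod must finish successfully before its main containers start. A minimal sketch of such a Job is shown below; the Job name, image names and commands are hypothetical placeholders, not our actual migration jobs.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: other-migration   # hypothetical name
spec:
  backoffLimit: 0          # assumption: do not retry automatically if a migration fails
  template:
    spec:
      restartPolicy: Never
      initContainers:
        # Runs first; the main container only starts after this exits successfully.
        - name: database-migration
          image: example.com/server-backend:TAG     # hypothetical image
          command: ["npm", "run", "migrate"]         # hypothetical command
      containers:
        - name: other-migration
          image: example.com/other-migration:TAG    # hypothetical image
          command: ["npm", "run", "migrate:other"]   # hypothetical command
```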

That was quite a lot to share. Thank you so much for reading!

Be sure to check out other articles from Advent Calendar written by my amazing colleagues here at MeetsMore.

See you next time!